Reports of businesses preventing employees from using ChatGPT and other generative AI tools might seem like an anti-innovation stance, especially as Microsoft’s Work Trend Index Annual Report found that 70% of people would delegate as many tasks as possible to AI if it could lessen their workloads.

But if sensitive organisational data is shared in public large language models like ChatGPT, it’s no wonder that GenAI tools pose a concern for IT leaders. Governance is needed to ensure compliance and protect data and organisational reputation.

Using Generative AI in Sales—Without Compromising Security

Adopting GenAI that is contained within your organisation is a safe starting point. That’s the critical message Microsoft is emphasising with its Copilot products.

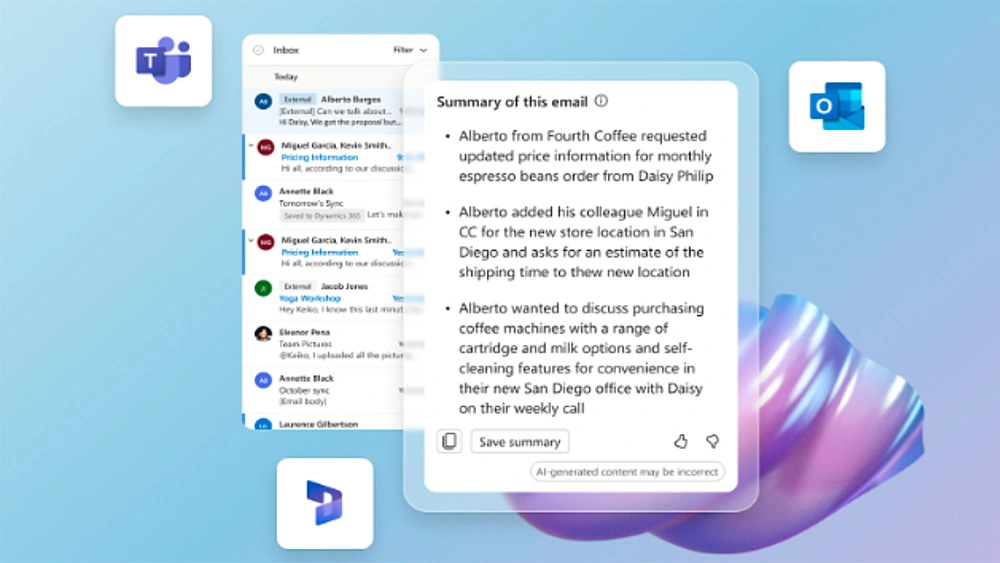

For instance, Copilot for Sales integrates across Dynamics 365, Outlook and Teams, providing lead summaries, meeting prep information, email generation and real-time coaching to help sellers save time and work more efficiently.

Let’s examine how it helps sellers capitalise on AI productivity benefits and work safely.

Data Stays Within Your Systems

Copilot works exclusively within the boundaries of your existing systems.

To ensure it doesn’t open the door to new risks, Copilot is grounded in your data and trusted resources. Copilot-specific data, including settings or generated insights, remain stored in your system.

By bringing AI directly into Outlook, Teams and Dynamics 365, Copilot assists sellers in real time without retaining copies of data. This includes generating on-demand summaries of leads, opportunities and meetings that help sellers interpret information, gain context, and reclaim time.

Aligned to Your Security Policies

Copilot doesn’t alter data governance policies. It follows your existing compliance, data retention and privacy policies across CRM and Microsoft 365 environments. This includes adhering to user roles, permissions and access controls configured in Dynamics 365 and M365.

Admins maintain control through the familiar D365 and M365 management tools. Copilot accesses CRM data within these pre-defined security policies and user permissions.

As a result, Copilot for Sales responses to an identical prompt may vary depending on individual user permission settings.
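To make the point concrete, here is a minimal Python sketch of permission-trimmed retrieval. It is an illustration only, not how Copilot for Sales or Dynamics 365 is implemented: the `Record` type, the role names and the sample records are all hypothetical. It simply shows why the same prompt can produce different answers for different users.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    name: str
    allowed_roles: frozenset  # roles permitted to read this record


# Hypothetical CRM records; the role names are made up for this sketch.
CRM = [
    Record("Contoso renewal", frozenset({"seller", "manager"})),
    Record("Fabrikam pricing exception", frozenset({"manager"})),
]


def visible_records(user_roles, records=CRM):
    """Return the names of records that at least one of the user's roles may read."""
    return [r.name for r in records if r.allowed_roles & set(user_roles)]


def answer(prompt, user_roles):
    """Stand-in for an AI response: it can only mention records the user can see."""
    return f"{prompt}: " + ", ".join(visible_records(user_roles))


print(answer("Open opportunities", ["seller"]))
print(answer("Open opportunities", ["manager"]))
```

Because the filter runs before any response is composed, a seller and a manager asking the identical question receive answers built from different sets of records.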

How Copilot for Sales Security Works

The Copilot for Sales interface runs in Dynamics, Outlook and Teams, while the AI brainpower resides in Microsoft’s secure cloud.

Copilot for Sales keeps your data contained within your systems. Behind the scenes, it comprises:

- Large Language Model (LLM) hosted in Azure OpenAI.

- Microsoft Graph containing user-level messages and meeting data.

- Experiences for Microsoft 365 apps including Outlook and Teams.

- Connection with your sales CRM data in Dynamics 365 or Salesforce.

- External data sources, which can be connected using Power Platform connectors.

From a natural language prompt, Copilot retrieves relevant information from Microsoft Graph, including emails and calendar information as appropriate. It also retrieves relevant details from the connected CRM, which stores customer and opportunity information.

The combined information is assembled and presented to the LLM, which uses this temporary external knowledge, alongside what it already knows, to inform its response.

Finally, post-processing is designed to remove hallucinations and ensure accurate and appropriate responses.
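The steps above (retrieve, assemble, generate, post-process) can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Microsoft's implementation: `GRAPH` and `CRM` are hypothetical in-memory stand-ins, `fake_llm` is a placeholder rather than a real model call, and the post-processing is a deliberately simple filter that drops any statement not found in the supplied context.

```python
# Hypothetical in-memory stand-ins for Microsoft Graph and the CRM.
GRAPH = {"emails": ["Contoso asked for a revised quote by Friday."]}
CRM = {"Contoso": {"stage": "Proposal", "value": 50000}}


def retrieve(account):
    """Step 1: gather the relevant messages and CRM details for one account."""
    emails = [e for e in GRAPH["emails"] if account in e]
    return {"emails": emails, "crm": CRM.get(account, {})}


def build_prompt(question, context):
    """Step 2: combine the question with the retrieved context."""
    lines = [f"Question: {question}", "Context:"]
    lines += [f"- email: {e}" for e in context["emails"]]
    lines += [f"- crm {k}: {v}" for k, v in context["crm"].items()]
    return "\n".join(lines)


def fake_llm(prompt):
    """Step 3: placeholder model. Echoes the context and adds one unsupported claim."""
    facts = [line[2:] for line in prompt.splitlines() if line.startswith("- ")]
    return facts + ["crm discount: 40%"]


def post_process(statements, prompt):
    """Step 4: keep only statements that appear in the supplied context."""
    return [s for s in statements if f"- {s}" in prompt]


def answer(question, account):
    context = retrieve(account)  # fetched per request, not kept afterwards
    prompt = build_prompt(question, context)
    return post_process(fake_llm(prompt), prompt)


for statement in answer("What is the status of this deal?", "Contoso"):
    print(statement)
```

In this sketch the context exists only for the duration of one call to `answer`, and the invented discount is filtered out because nothing in the retrieved data supports it. Real grounding checks are considerably more sophisticated than a string match.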

This process does not store customer data in the LLM or train the model. In contrast to public GenAI tools, Copilot doesn’t involve replicating customer data lakes or system modelling.

Insights generated are immediately served to sellers and stored within your environment.

The Outlook add-in and Teams app are designed to integrate seamlessly with these underlying services that power AI capabilities. None of the code runs directly on employee devices as it is cloud-hosted and managed by Microsoft.

By meeting sellers where they work, Copilot features feel like a natural part of workflows to minimise time spent moving between interfaces. Its architecture protects data while delivering AI-powered productivity features directly within the tools sellers use daily.

Encrypted In Transit and At Rest

Copilot for Sales secures data through proven encryption technologies. This includes TLS encryption that protects all customer data in transit between Copilot components and services.
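For readers who want to see what enforcing encryption in transit looks like from the client side, the following Python sketch builds a TLS context with the standard `ssl` module. It is a generic example, not Copilot configuration: the helper name is hypothetical, and the TLS 1.2 minimum is an assumption made for this sketch, since the protocol version is not specified here.

```python
import ssl


def strict_tls_context():
    """Build a client-side TLS context that verifies certificates and refuses old protocol versions."""
    ctx = ssl.create_default_context()  # certificate and hostname checks are on by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed floor for this sketch
    return ctx


ctx = strict_tls_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

A context like this can be passed to standard HTTPS clients so that connections failing certificate validation, or offering only older protocol versions, are refused.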

Encryption at rest follows the native capabilities of Dynamics 365 and M365. Also, encryption schemes meet regional compliance requirements for handling regulated data, including GDPR.

Responsible and Secure AI

Copilot conforms to Microsoft’s responsible AI practices and is designed to assist, not replace, human sellers. For instance, Copilot will make recommendations, but it doesn’t automatically take action unless used in combination with agents.

As we’ve highlighted, Copilot is grounded in your organisation’s data to address the risk of unsafe AI output. As part of the Azure ecosystem, Copilot inherits its security, compliance and privacy policies, including enforced authentication. It doesn’t use customer data to train AI models.

Next steps…

According to a report by Nucleus Research in April 2023, specialised AI technology is predicted to boost productivity by 10-35%.

Copilot aims to capitalise on these benefits by supporting sellers with automated tasks, auto-generated emails, meeting summaries, and other time-saving features.

Designed for security and privacy, Copilot is GenAI built into your cloud environment, which belongs exclusively to your organisation.

These clear distinctions separate Copilot from ChatGPT and other public tools, giving you the reassurance of embracing responsible AI that doesn’t compromise security and compliance.

Contact us today for guidance on configuring Copilot for Sales to fit into your workflows. Don’t miss out on the opportunity to boost your sales team’s productivity while safeguarding your data.

Related:

- What Early Adopters Reveal About Copilot

- How Sales Copilot Improves Dynamics 365 and Outlook Integration to Boost Productivity

- Sales Copilot Architecture Overview