Microsoft Dynamics 365 and Power Apps administrators continuously optimise their environments and seek ways to minimise storage costs.

However, a new challenge now lurks within the very tools designed to enhance productivity: the accumulation of Copilot transcripts. Every Copilot conversation is logged as a transcript, so while Copilot aids user efficiency and creativity, it also consumes Dataverse storage capacity.

Understanding Copilot Storage in Dataverse

Transcripts are records of the interactions users have with your Copilot. These logs capture the message activity shown in a Copilot conversation, such as text and interactive summaries, along with the data associated with each activity.

Dataverse stores Copilot transcripts directly, using your environment’s storage capacity, so each transcript consumes a portion of your Dataverse file storage.

Each conversation transcript is a CSV (comma-separated values) file that can be viewed and exported from the Power Apps portal; doing so requires the transcript viewer security role.

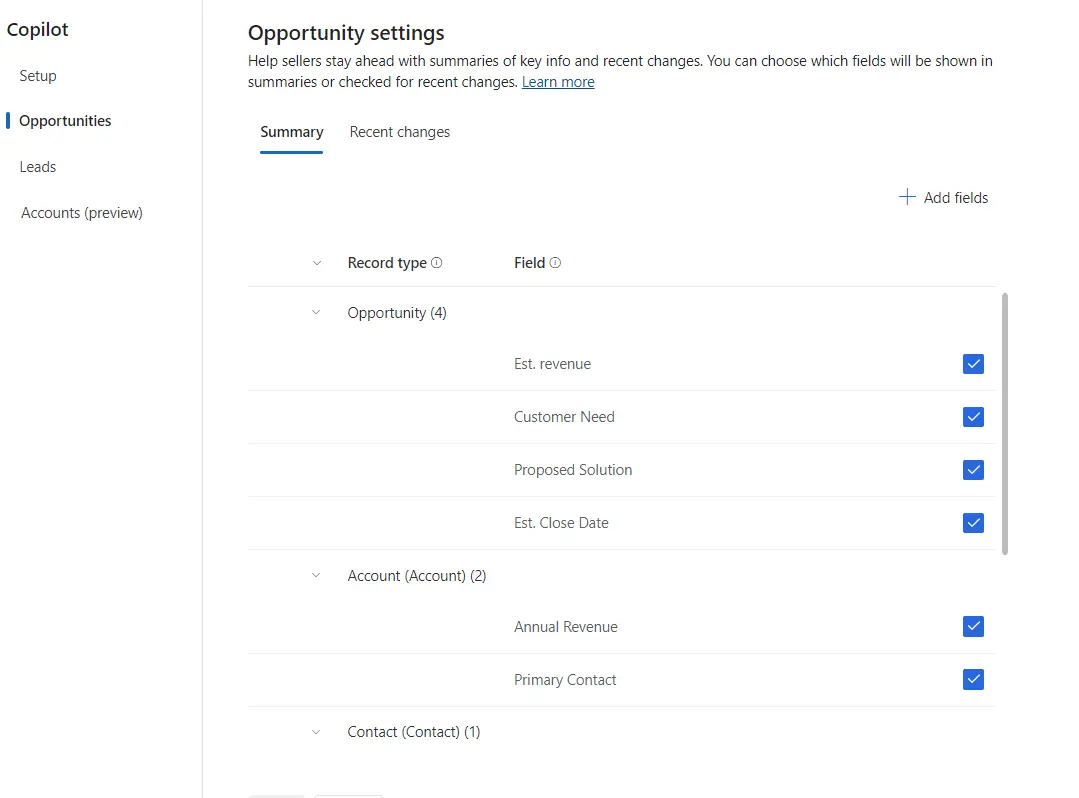

The size of transcript files depends on how Copilot is used and configured within your organisation, including the number of fields shown in Copilot summaries or checked for changes.

For instance, Copilot in Dynamics 365 Sales uses a default set of fields for leads and opportunities. During setup, administrators can refine this set or add fields from these or related tables to make Copilot responses more relevant. This applies to summaries of opportunities, leads and accounts, as well as to the fields used to answer Copilot ‘recent changes’ questions.

However, enabling more fields for Copilot increases the overall transcript file size and, ultimately, Dataverse storage consumption.
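To see how field selection feeds into storage growth, here is a rough back-of-the-envelope estimate in Python. It is a minimal sketch, assuming transcript size grows linearly with the number of fields; the `TranscriptProfile` class, the per-field size, the fixed overhead and the conversation volume are all hypothetical placeholders rather than Microsoft figures, so substitute numbers measured from your own exported transcripts.

```python
from dataclasses import dataclass


@dataclass
class TranscriptProfile:
    """Hypothetical inputs for a rough transcript-size estimate."""
    fields_per_summary: int        # fields Copilot includes in summaries or change checks
    avg_bytes_per_field: int       # assumed average serialised size of one field
    overhead_bytes: int            # assumed fixed size of messages and metadata
    summaries_per_transcript: int = 1


def estimate_transcript_bytes(p: TranscriptProfile) -> int:
    """Approximate size of one transcript: fixed overhead plus field payload."""
    field_payload = p.fields_per_summary * p.avg_bytes_per_field * p.summaries_per_transcript
    return p.overhead_bytes + field_payload


def estimate_daily_growth_mb(p: TranscriptProfile, conversations_per_day: int) -> float:
    """Approximate new transcript storage per day in MB (1 MB = 1,000,000 bytes)."""
    return estimate_transcript_bytes(p) * conversations_per_day / 1_000_000


# Illustrative numbers only: the same workload with 10 fields versus 25 fields.
default_fields = TranscriptProfile(fields_per_summary=10, avg_bytes_per_field=200, overhead_bytes=4_000)
extra_fields = TranscriptProfile(fields_per_summary=25, avg_bytes_per_field=200, overhead_bytes=4_000)

for label, profile in [("default fields", default_fields), ("extra fields", extra_fields)]:
    print(f"{label}: {estimate_transcript_bytes(profile)} bytes per transcript, "
          f"{estimate_daily_growth_mb(profile, conversations_per_day=500):.1f} MB per day")
# default fields: 6000 bytes per transcript, 3.0 MB per day
# extra fields: 9000 bytes per transcript, 4.5 MB per day
```

Under these assumed numbers, going from 10 to 25 fields raises daily growth by half; the real ratio depends on how large your chosen fields are compared with the rest of each conversation.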

Copilot Auditing Requirements

Additionally, for Copilot in Dynamics 365 Sales, audit history is necessary to show recent changes to leads, opportunities and any related tables.

If auditing isn’t already enabled for these tables, you’ll be prompted to enable it during Copilot setup. The resulting audit records consume a separate part of your environment’s Dataverse storage capacity (log capacity).

Customising Copilot Transcripts Retention

By default, Microsoft applies a 30-day retention policy to Copilot transcripts, enforced by an automated bulk-deletion job. This keeps storage use in check without manual oversight.

Administrators can adjust the Copilot transcript retention period to meet specific needs. For example, businesses with agents and sellers using Copilot can reduce Dataverse storage demand by shortening the standard 30-day period. Conversely, extending it might be necessary to fulfil certain auditing and compliance requirements.
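A retention policy of this kind comes down to two calculations: which transcripts fall outside the window, and how much storage remains occupied once daily creation and deletion balance out. The sketch below models both in plain Python under stated assumptions; it does not call Dataverse, and the helper names, dates and the assumed 3 MB of new transcripts per day are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def is_expired(created_on: datetime, now: datetime, retention_days: int) -> bool:
    """True if a transcript is older than the retention window and would be deleted."""
    return created_on < now - timedelta(days=retention_days)


def steady_state_mb(daily_growth_mb: float, retention_days: int) -> float:
    """Storage held once deletions keep pace with new transcripts: one window's worth."""
    return daily_growth_mb * retention_days


# Hypothetical transcripts created 5, 29, 31 and 60 days before "now".
now = datetime(2024, 6, 30, tzinfo=timezone.utc)
created = [now - timedelta(days=d) for d in (5, 29, 31, 60)]
expired = [c for c in created if is_expired(c, now, retention_days=30)]
print(len(expired))  # 2 (the 31- and 60-day-old transcripts)

# Compare retention periods for an assumed 3 MB of new transcripts per day.
for days in (14, 30, 90):
    print(f"{days} days: {steady_state_mb(3.0, days)} MB")
```

The steady-state figure scales linearly with the retention period, which is why shortening or extending the 30-day default has a direct, predictable effect on the storage that transcripts occupy.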

You can tailor your approach based on factors such as the fields Copilot checks, your available storage capacity and any industry-specific data retention regulations you must meet. Planning retention this way ensures your transcript management supports both your storage and compliance requirements.

Azure Blob Storage for Long-Term Transcripts Retention

For organisations that need to retain Copilot user chat transcripts long-term, Azure Blob Storage offers a cost-effective alternative to Dataverse.

That’s because Azure Blob Storage provides scalable, secure storage at significantly lower cost. By integrating it, organisations can implement flexible retention policies and potentially reduce costs, making it an attractive option for balancing budgets against large-scale storage demands.
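One way to combine the two is to archive transcripts to Blob Storage before the Dataverse retention job deletes them. The sketch below, a minimal illustration under stated assumptions, builds a CSV archive of expired transcripts in memory and hands it to an upload function you supply. The record shape, the blob naming scheme and the `upload` callable are hypothetical; in a real deployment the callable might wrap `BlobClient.upload_blob` from the `azure-storage-blob` package, and the records would come from your Dataverse export.

```python
import csv
import io
from datetime import datetime, timedelta, timezone
from typing import Callable, Iterable

# Hypothetical record shape: (transcript_id, created_on, content)
Transcript = tuple[str, datetime, str]


def archive_expired(
    transcripts: Iterable[Transcript],
    now: datetime,
    retention_days: int,
    upload: Callable[[str, bytes], None],
) -> list[str]:
    """Write transcripts older than the retention window to one CSV and upload it.

    Returns the archived IDs so they can be deleted from Dataverse only after upload.
    """
    cutoff = now - timedelta(days=retention_days)
    expired = [t for t in transcripts if t[1] < cutoff]
    if not expired:
        return []

    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["transcript_id", "created_on", "content"])
    for transcript_id, created_on, content in expired:
        writer.writerow([transcript_id, created_on.isoformat(), content])

    blob_name = f"copilot-transcripts/{now:%Y-%m-%d}.csv"  # hypothetical naming scheme
    upload(blob_name, buffer.getvalue().encode("utf-8"))
    return [t[0] for t in expired]


# Usage with an in-memory stand-in for a real Blob upload.
uploaded: dict[str, bytes] = {}


def fake_upload(name: str, data: bytes) -> None:
    """Stand-in for a Blob Storage upload: keep the bytes in memory."""
    uploaded[name] = data


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
records = [
    ("t1", now - timedelta(days=45), "Summarise opportunity"),
    ("t2", now - timedelta(days=3), "What changed on this lead?"),
]
archived = archive_expired(records, now, 30, fake_upload)
print(archived)  # ['t1']
```

Returning the archived IDs, rather than deleting inside the function, keeps the order safe: records are removed from Dataverse only once the upload has succeeded, and a failed upload raises before anything is returned.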

Find out more by reading our article: Reduce Storage Costs with Azure Blob Storage.

Helping You Optimise Your Dataverse Storage

ServerSys is here to assist you in managing your cloud storage capacities, ensuring you can focus on your core priorities without the stress of unexpected costs.

When it comes to managing Copilot chat transcripts, our consultants can provide solutions that support your data needs efficiently, and our team is always ready to help you configure Copilot to avoid Dataverse storage issues.

Contact us today to optimise your Dataverse storage.